We are currently living through a “perfect storm” in the data infrastructure world, a convergence of trends that is putting unprecedented pressure on IT budgets.

On one front, the sheer gravitational pull of data demand is becoming exponential. We are past the era of simple file storage. We are now in the age of consolidating virtual machines and containers, generative AI training sets measured in hundreds of terabytes, high resolution volumetric video, relentless IoT sensor logging, and modernized enterprise backup strategies that require instant recovery capabilities. The world is generating petabytes of data that doesn’t just need to be stored; it needs to be instantly accessible, highly performant, and “always on.”

On the other front, the underlying economics of the hardware required to store that data have turned hostile. For nearly a decade, IT leaders got used to a comfortable trend: flash prices went down, and density went up. We took it for granted.

That trend has violently reversed.

We have entered an era of the “Great Storage Squeeze.” The cost of NAND flash (SSDs) is rising sharply, driven by calculated production cuts by major manufacturers and supply chain constraints. Simultaneously, the cost of DRAM is skyrocketing, driven by the insatiable appetite of AI GPU servers gobbling up high performance memory (HBM) and the industry transition to more expensive DDR5 standards.

If you are relying on traditional storage architectures designed fifteen or twenty years ago, this squeeze isn’t just uncomfortable, it’s financial anemia.

In this new economic reality, efficiency is no longer just a “nice to have” feature on a datasheet; it is the single most critical metric for Total Cost of Ownership (TCO). If your architecture wastes flash or squanders RAM, your budget is bleeding.

This post will take a deep dive into why legacy “shared everything or nothing,” dual controller architectures are financially disastrous in the current market, and how VAST Data’s unique Disaggregated Shared Everything (DASE) architecture and revolutionary Similarity Engine offer the only viable economic escape route for petabyte scale organizations.

The Legacy Trap: Why Dual Controller Architectures Can’t Survive the New Economics

For over two decades, the “shared everything ” HA pair (high availability dual controller) has been the standard Lego brick of enterprise storage. You buy a physical chassis containing two controllers for redundancy, and a set of drives plugged into the back.

Those two controllers “own” those drives. No other controller in your data center can touch them.

This “shared everything” approach worked perfectly fine when enterprise data sets were measured in tens of terabytes. But as organizations scale into multiple petabytes, this architecture introduces massive, expensive inefficiencies that are magnified tenfold by rising component costs.

The Silo Problem and the Curse of Stranded Capacity

Traditional architectures scale by adding more “silos.” If you fill up Array A, you must buy Array B. If you fill up Array B, you buy Array C. If you need more performance than what the dual controllers can provide, yes, you guessed it properly, you add another dual controller cluster..

The fundamental economic flaw is that these arrays are islands. They do not share resources.

Imagine you have ten separate legacy arrays. It is statistically improbable that all ten will be perfectly utilized at 85%.

- Array A, hosting a mature database, might be 95% full, constantly triggering capacity alarms.

- Array B, bought for a project that got delayed, might be sitting at 20% utilization.

In a legacy world, Controller Pair A cannot utilize the stranded capacity trapped behind Controller Pair B. You have terabytes of expensive flash sitting idle in one rack, yet you are forced to issue a purchase order for more expensive flash for the adjacent rack simply because the empty space is trapped in the wrong silo.

Industry analysts estimate that in large scale traditional environments, 25% to 35% of total purchased flash capacity is perpetually stranded due to imperfect silo balancing. When SSD prices are skyrocketing, paying for 30% more flash than you actually use is a massive, unacceptable tax on your organization.

The “Dirty Little Secret” of Legacy Data Reduction

This is perhaps the most critical financial deficiency of traditional architectures in a multi petabyte environment, yet it is rarely discussed openly by legacy vendors.

Every vendor touts their deduplication and compression capabilities. They promise 3:1, 4:1, or sometimes 5:1 data reduction ratios. But there is a massive caveat hidden in the architectural fine print: Deduplication is bounded by the cluster boundary.

Because traditional architectures are based on independent silos, they only “know” about the data within their specific domain. They have no global awareness.

The Multi Cluster Duplication Disaster: A Real World Scenario

Let’s visualize a very common enterprise workflow to understand the scale of this economic waste.

- Production: You have a primary, high performance legacy all-flash array (Cluster A) holding 1PB of critical production data.

- Disaster Recovery: You require a remote copy. You replicate that 1PB to a secondary cluster (Cluster B) at a different site.

- Dev/Test: Your development teams need realistic data to work with. You spin up a third cluster (Cluster C) and clone the production environment for them.

- Analytics: Your data science team needs to run heavy queries without impacting production. You extract that data to a fourth data lake cluster (Cluster D).

In a traditional, shared nothing world, Cluster B, C, and D have absolutely no knowledge of the data sitting on Cluster A.

Even though the 1PB of data across all four sites is 99% identical, every single cluster will re-ingest it, re-process it, re-hash it, and store it as unique physical blocks.

The Economic Reality: You have 1PB of actual corporate information, but you have purchased and are powering 4PB of expensive flash to store it.

In a world of rising SSD costs, this inability to deduplicate globally across your entire environment is a financial catastrophe. It forces you to buy the same expensive terabyte over and over again.

The Paradigm Shift: VAST Data’s Disaggregated Shared Everything (DASE)

To solve an efficiency crisis of this magnitude, you cannot just tweak the old model with faster CPUs. You have to break the architecture completely.

VAST Data realized that the shared nothing, dual controller approach was a dead end for petabyte scale. Instead, VAST built the DASE (Disaggregated Shared Everything) architecture from the ground up to align with modern hardware realities.

How DASE Breaks the Silos

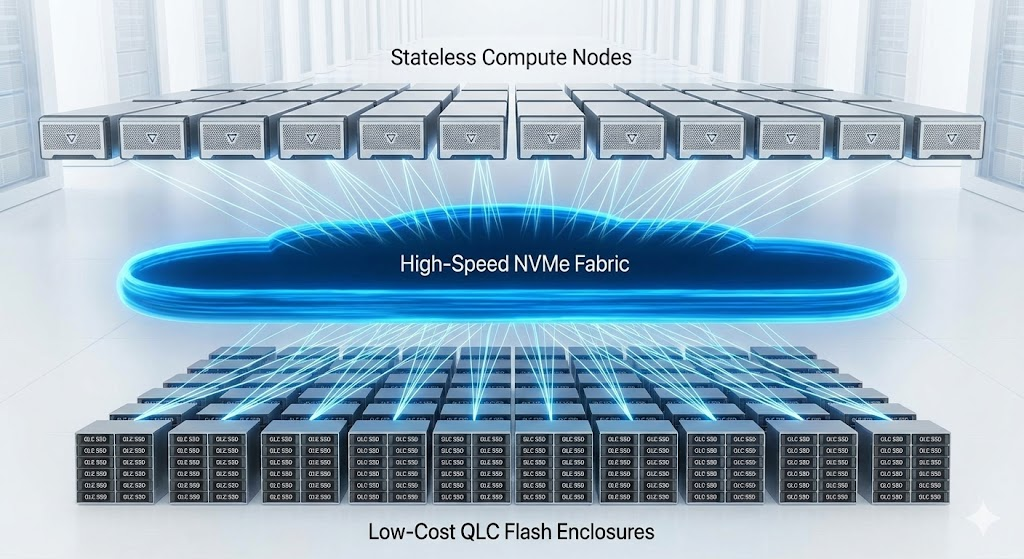

DASE fundamentally separates the “brains” of storage (compute logic) from the “media” (persistence).

- The Compute Layer (The Brains): These are stateless Docker containers running on standard servers. They handle all the complex logic—NFS/S3/SMB/Block protocols, erasure coding, encryption, and data reduction. Crucially, they hold no persistent state. If a node fails, another one instantly takes over without a long rebuild process. You can scale performance linearly just by adding more stateless containers.

- The Persistence Layer (The Media): This is a giant, shared pool of highly available NVMe JBOFs (Just A Bunch Of Flash). These enclosures contain no logic, only media. They hold a mix of expensive, ultra fast Storage Class Memory (SCM) for write buffering and metadata, and dense, affordable QLC flash for long term storage.

- The Interconnect (NVMe-oF): A high speed, low latency Ethernet fabric connects everything to everything.

The crucial difference that changes the economics: Every single compute node can see and access every single SSD in the entire cluster directly over the network at NVMe speeds.

Why DASE is an Economic Fortress

Because everything is shared, there are no silos. There is absolutely no stranded capacity.

The entire cluster is one single pool of storage. If you are at 70% capacity, you are at 70% utilization across every drive. You never have to over provision one resource just to get more of the other.

Furthermore, DASE unlocks the economic potential of QLC Flash. QLC (Quad Level Cell) flash is significantly denser and cheaper than the TLC flash used by most legacy arrays. However, QLC has low endurance—it wears out quickly if you write to it randomly, the way legacy controllers do.

VAST’s DASE architecture uses the ultra fast SCM layer to absorb all incoming writes, organizing them into massive, perfectly sequential stripes before laying them down gently onto the cheap QLC flash. This allows VAST systems to use low cost QLC for 98% of their capacity while offering a 10 year endurance guarantee, something legacy architectures simply cannot achieve.

The Secret Weapon: The VAST Similarity Engine

We established that rising SSD costs make capacity efficiency paramount. But we also established that rising RAM (DDR5) costs are painful.

Traditional deduplication is terrible at both.

- It’s Fragile (Bad Capacity Efficiency): Old school dedupe breaks data into fixed blocks (e.g., 8KB). It creates a mathematical “hash” (a fingerprint) of that block. If a single bit changes in that 8KB block, the hash changes completely, and the dedupe fails. It only catches exact matches. It fails miserably on encrypted data, compressed logs, or genomic sequencing data where blocks are almost identical but not perfect matches.

- It’s RAM Hungry (Bad Memory Efficiency): To know if a block is a duplicate, the storage controller must keep a massive table of every single hash it has ever seen. Where does that table live? In incredibly expensive, fast DRAM. As your data grows to petabytes, the required DRAM table grows linearly, becoming prohibitively expensive.

Enter Similarity Based Data Reduction

VAST didn’t just rebuild the hardware architecture; they reinvented data reduction for the modern era.

The VAST Similarity Engine doesn’t just look for exact block matches. It looks for similar blocks.

Using advanced algorithms derived from hyperscale search engine technology, VAST breaks data down into very small chunks and compares them against “reference blocks” already stored in the system. If a new block is 99% similar to an existing block, VAST compresses it against that reference block, storing only the tiny delta of differences.

The Twin Economic Benefits of Similarity:

- Far Better Reduction (Saving SSDs): Similarity works amazingly well on data types that traditional dedupe gives up on—like voluminous log files, machine generated IoT data, genomic data, and even pre encrypted backup streams. VAST routinely achieves dramatic data reduction on datasets considered “uncompressible” by legacy vendors.

- Massive RAM Savings Because Similarity doesn’t rely on a rigid, massive hash table of every single 8KB block, it requires a fraction of the DRAM that legacy systems need to manage petabytes of data. The metadata footprint is radically smaller. In an era where filling a server with DDR5 memory can cost as much as the CPUs, this is a massive cost advantage.

The Global Data Reduction Knockout Punch

Remember the “Multi Cluster Disaster” scenario where you paid for 4PB of flash to store 1PB of data across Production, DR, Dev, and Analytics?

Because VAST DASE is a single, scalable global namespace that can grow to exabytes without performance degradation, you never have that problem.

Whether you have one PB or fifty PBs, it is all managed by one loosely coupled DASE cluster. The Similarity Engine sees everything.

If you create a clone of your 1PB production database for testing, VAST recognizes it instantly. It doesn’t copy the data. It just creates pointers. Even as the test team modifies that data, the Similarity engine only stores the tiny unique changes.

With VAST, you store 1PB of information once. Period. You don’t pay the “silo tax” ever again.

Conclusion: The Economics Have Changed. Will You?

The era of cheap flash and abundant, inexpensive RAM masking inefficient storage architectures is over. The storage market has entered a new phase of harsh economic reality defined by supply scarcity and exploding demand.

Sticking with traditional dual controller architectures in this environment means voluntarily accepting stranded capacity, paying for duplicate data copies across multiple silos, and buying excessive amounts of overpriced RAM just to manage inefficient legacy dedupe tables.

VAST Data’s DASE architecture and Similarity Engine were designed specifically for this petabyte scale reality. By breaking down physical silos through Disaggregated Shared Everything, and by reinventing data reduction to be both globally aware and radically RAM efficient, VAST doesn’t just offer better technology.

It offers the only viable economic path forward for large scale data infrastructure in the 2020s. Stop paying the legacy tax.

Leave a Reply